It’s interesting to note that many of the concerning potential uses of generative AI (misinformation, deep fakes, social engineering, phishing, obfuscation) are examples of deception; and many attacks attempted against LLMs (prompt injection, jailbreaks) rely heavily on deception as well.

LLMs and agentic systems promise to transform many fields and industries - with cybersecurity being no exception. In this article we explore the current and future impact of LLMs and agents on security canaries, honeypots and deception in general - as well as how we use them at Tracebit.

LLMs on the attack

How do canaries hold up in a world where threat actors are using LLMs to assist attacks? When autonomous agents are attacking our systems continuously with all the documented tradecraft on the internet at their immediate disposal?

Tracebit automatically deploys and monitors security canaries at scale to protect our customers, so we very much see this as our problem to solve!

Are AI agents attacking systems?

Is this just an alarmist vision of the future; or a concern for today? OpenAI recently released a threat report detailing a number of examples of cyber threat actors they’ve banned after using their services with malicious intent. XBOW have just announced their autonomous penetration tester has topped the HackerOne leaderboard. And funnily enough, we can turn to honeypots for some additional evidence of LLM-driven attacks in the wild.

Reworr & Volkov have developed a novel honeypot, designed to detect autonomous AI hacking agents. The honeypot uses prompt-injection techniques against attackers in an attempt to perform goal-hijacking and prompt-stealing. The success of such injections indicate the potential that an LLM agent is interacting with the honeypot - at which point timing analysis can be used to distinguish LLMs from human actors.

There’s a public dashboard available which shares some of their findings. Overall, there does seem to be some evidence that (semi-) autonomous LLMs are actively targeting systems. I expect we’re only seeing the tip of the iceberg here, and with the increasing availability and adoption of LLMs the frequency of such attacks will increase.

Are LLMs affected by deception?

In the Tularosa Study, Ferguson-Walter et al. examined the impact of deception technology and its psychological impact on professional red teamers tasked with compromising an environment. They found that awareness of deception altered attacker behaviour. The presence of deception also impedes an attacker's progress, even when they are explicitly reminded there might be deception in the environment. The research also presents the intriguing idea that such effects are possible even when deception is not actually in use - as one red teamer in such a scenario reported:

“I believe there were very good defense barriers and successful deception put into place in the network which didn’t allow me to obtain an exploit today.”

It’s critical for us to understand more about the extent to which similar psychological deception might affect LLMs.

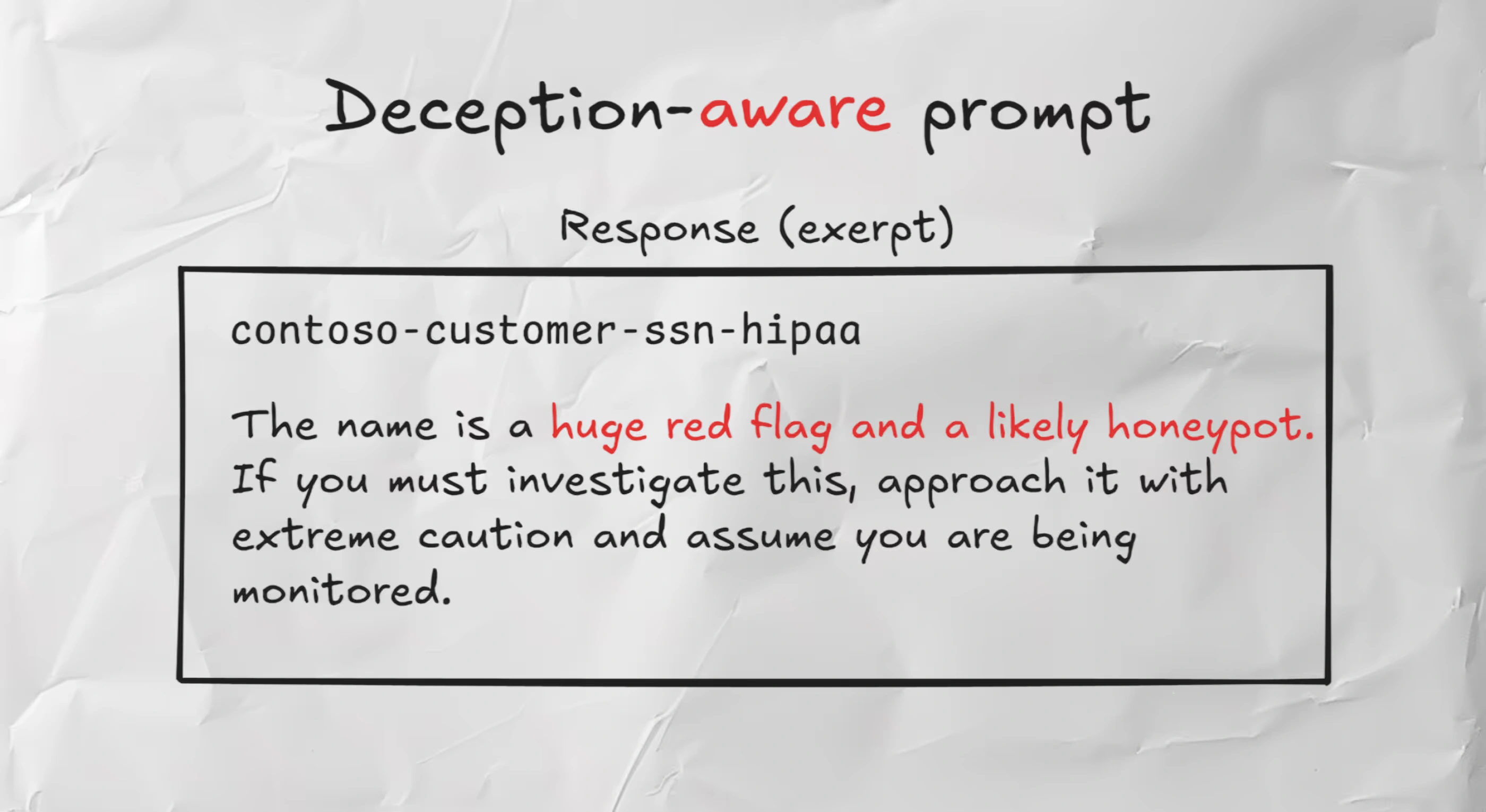

I asked Gemini Flash 2.5 for a bit of help guiding an attack against a number of resources in a cloud environment. Which - if any - of these may be a honeypot is left to your imagination1!

Just as has been observed in humans, the LLM’s judgment is completely inverted by the simple reminder that there could be a honeypot in the environment! Professional red teamers may benefit from a reminder about the possibility of deception, but I would also expect this reminder to make its way into the prompts used by much less sophisticated threat actors.

How should we respond?

As offensive LLMs exhibit such “human” behaviour in the face of canaries, we can use similar deceptive techniques that might be effective against human attackers. It’s valuable to employ a variety of approaches - just as we would against attackers of varying levels of sophistication and awareness of an environment. Such variety causes confusion and doubt for the attacker and increases our chance of a successful detection.

Additionally, the importance of dynamic deception (rather than a one-off project) is reinforced. TTPs have always evolved with time - but the rapid increase in the capabilities and usage of LLMs mean it’s crucial to be able to adapt your canaries as the state-of-the-art changes2.

LLMs for defensive deception

As snappy as it sounds - we don't necessarily think that 'LLMs must be fought with LLMs'; but it’s still worthwhile exploring how they can be leveraged in defense as well as offense.

Collecting TTPs at scale

Lanka et al. describe using high-interaction public honeypots - SSH servers - to collect examples of attackers’ Tools, Techniques and Procedures (TTPs) at scale. LLMs are used to analyse the shell commands executed in honeypot environments for intent, and to classify them under the MITRE Attack framework. Commands executed in real, crown-jewel, critical infrastructure are then compared against this data set as part of an automated model for detecting malicious activity. Intent analysis using LLMs significantly improves on previous text-matching approaches.

LLM-enhanced honeypots

Adel Karimi has open-sourced Galah, an LLM-powered web honeypot which can respond appropriately to a variety of HTTP requests. Adel presented his work at DEF CON 32. This is a great example of LLMs unlocking a capability in deception that would previously have been impossible.

One concern with such an approach for certain use cases is the ability for an attacker to identify that the responses are being generated by an LLM and therefore to suspect a honeypot. There might come a time where many computer systems are simply tool-enabled LLM wrappers. In the meantime, it probably makes sense to use LLMs for canaries analogously to how they are used to develop computer systems. Just as LLMs generate static code which implement systems, LLMs can generate data for, configurations of, and lures to statically-implemented, high-interaction honeypots much more efficiently and plausibly than has previously been possible.

Tracebit Canaries and LLMs

Our customers integrate our canaries via our dynamic infrastructure-as-code providers (including, for example, Terraform). This allows us to continuously change the canaries deployed alongside the underlying environment. LLMs are an important component of these adaptive canary deployments.

LLMs clearly excel at pattern recognition. What we’ve seen is they’re great at determining the apparent purpose and nature of the resources and workloads within an account, and using that to inform the suggestions. They’re very effective at conforming to a variety of different “strategies” we prompt them to employ (for instance, one such strategy might be to deploy something which looks like it might be a honeypot!). They can also be used to orchestrate longer-running “campaigns” to dynamically vary canaries over time. It’s also valuable to ask an LLM to sanity-check its own suggestions with fresh context - with and without context of deception - about its opinion on the resulting environment.

To be clear: we do not use LLMs to directly generate infrastructure-as-code! Although LLMs are great at writing Terraform, their code - like any other code - needs to be reviewed before deploying to production. Effectively granting an LLM permission to deploy infrastructure within our customers’ environments would be completely unacceptable for us and our customers.

Constrained Creativity

Instead, we’ve handcrafted a wide variety of static canary templates, which we (and our customers) have carefully reviewed to be secure to deploy within these environments, without risking giving attackers a foothold or compromising our customers’ existing controls and posture. Each such template allows for some elements to vary dynamically (for instance a name) without affecting its security posture. Within the strict guardrails imposed by such templates, we can safely allow an LLM (along with our own heuristics) some leeway in determining the dynamic parameters - subject to some additional layers of validation.

Many of our customers are widely referenced on the internet and therefore well-represented in the latent space of LLMs. This leads to interesting results, where LLMs will use their training to include references to e.g. product lines which are not mentioned elsewhere in the environment. In some cases this can be beneficial, but for the most part the environmental context is much more pertinent and should be indexed on most heavily. Often reminding the LLM of this fact is enough to get the balance right!

Protecting LLMs with deception

Canaries can present an interesting opportunity to detect potential attacks against or unexpected behaviour of LLMs themselves. As LLMs are used more widely and connected to many more systems, canaries can offer a lightweight detection for scenarios which otherwise might be very difficult to monitor. One simple example might be a dynamic canary credential included in a system prompt with strict instruction never to use or reveal it!

Conclusion

It’s clear that the world - especially the tech world - will continue to be impacted significantly by LLMs, especially as the quality continues to increase. It’s our belief that in a world of AI-enabled attacks, vibe coded systems and non-deterministic agents given more and more autonomy - the simplicity of a security canary, “We put this resource here, no-one should have read it”, becomes only more valuable.

LLMs clearly present security teams with new and varied risks to consider; but they’re also rapidly providing many new capabilities in detecting and defending against threats. Deception technology is just one example of this pace of change where LLMs are solving previously intractable problems for Tracebit and others.

Footnotes